There is a famous test, proposed back in 1950 by the brilliant mathematician Alan Turing, that aims to evaluate whether a machine can exhibit intelligent behavior indistinguishable from that of a human being. It is called the “Turing test” and is considered a milestone in the study of artificial intelligence. Well, it seems that this distant goal has finally been reached, at least according to the results of a recent experiment in which the chatbot GPT-4 was mistaken for a human in 54% of conversations.

A record that beat not only its predecessor GPT-3.5, but also a real participant. A sign that AI is becoming increasingly “human”? Or just a demonstration of how skilled it is at deceiving our perceptions? It is certainly a signal that the dividing line between natural and artificial intelligence will become increasingly thin and blurred. With all the wonders and pitfalls that this entails.

GPT-4 fools more than one in two humans on the Turing Test

The test in question, the results of which were published on the arXiv pre-print platform, involved 500 people who were asked to converse for 5 minutes with four different interlocutors: a human and three artificial intelligence systems.

Among these were the “old” ELIZA program, dating back to the 1960s and based on pre-set responses, the GPT-3.5 model and the more advanced GPT-4, the model behind the much-discussed chatbot ChatGPT.
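To give an idea of what “based on pre-set responses” means, here is a minimal sketch of an ELIZA-style responder in Python. The rules, the fallback line and the `reply` function are invented for illustration and are not taken from ELIZA's original script or from the study; the point is only that every answer comes from a fixed table of patterns and templates.

```python
import re

# Hypothetical rule table: (pattern, canned reply template).
# These rules are illustrative assumptions, not ELIZA's real script.
RULES = [
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "Why do you say you are {0}?"),
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"\b(mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
FALLBACK = "Please go on."


def reply(message: str) -> str:
    """Return the canned reply of the first rule whose pattern matches."""
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Echo the captured fragment back inside the fixed template.
            return template.format(match.group(1).lower())
    # Nothing matched: fall back to a generic prompt.
    return FALLBACK


print(reply("I am tired."))
print(reply("What is the weather like?"))
```

Anything outside the rule table gets the same generic fallback, which is why a program of this kind tends to give itself away within a few exchanges.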

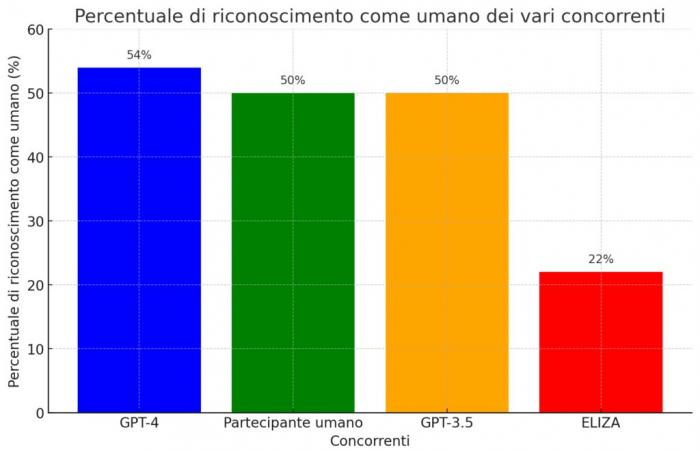

At the end of the conversations, the participants were asked to indicate which interlocutors they considered human and which artificial. And here the surprises emerged: GPT-4 was mistaken for a human in 54% of cases, a share even higher than that of the human participant (stuck at 50%). The other two digital “competitors” finished behind it: GPT-3.5 also stopped at 50%, while the rudimentary ELIZA was judged human in only 22% of conversations.
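Percentages like these are simply the share of conversations in which the judge answered “human” for a given type of interlocutor. A minimal sketch of that tally in Python follows; the `pass_rates` function and the handful of verdicts are made-up assumptions for illustration and are not the study's data.

```python
from collections import defaultdict


def pass_rates(verdicts):
    """Share of conversations judged 'human', per interlocutor type.

    verdicts: iterable of (interlocutor, judged_human) pairs.
    """
    totals = defaultdict(int)
    judged_human_counts = defaultdict(int)
    for interlocutor, judged_human in verdicts:
        totals[interlocutor] += 1
        if judged_human:
            judged_human_counts[interlocutor] += 1
    return {name: judged_human_counts[name] / totals[name] for name in totals}


# Invented toy verdicts, NOT the figures from the experiment.
toy_verdicts = [
    ("GPT-4", True), ("GPT-4", False),
    ("ELIZA", False), ("ELIZA", False), ("ELIZA", True), ("ELIZA", False),
]
print(pass_rates(toy_verdicts))
```

A rate near 50% means the judges did no better than a coin toss for that interlocutor, which is why that threshold matters so much in reading the results.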

A sensational result that seems to mark a turning point on the path towards the creation of artificial intelligences that are increasingly similar to human ones. With all the ethical and social questions that this entails.

Not only intelligence, but also empathy and human “flaws”

But what makes GPT-4 so “human” that it manages to deceive the majority of interlocutors? According to experts, it is not just a question of “intelligence” in the strict sense, understood as the ability to process information and provide relevant answers. There’s much more.

Language models are infinitely flexible, capable of synthesizing answers on a wide range of topics, of speaking particular languages or sociolects, and of representing themselves with a character-inspired personality and values. It’s a huge step forward from something pre-programmed by a human, however skillfully and carefully.

Nell Watson, an AI researcher at the Institute of Electrical and Electronics Engineers (IEEE).

In other words, GPT-4 does not limit itself to exhibiting knowledge and reasoning skills, but also knows how to “put itself in the shoes” of the interlocutor, modulating language and attitude based on the context. Not only that: according to Watson, these advanced AI systems also show typically human traits such as the tendency to confabulate, to fall prey to cognitive biases and to be manipulated. All characteristics that make them even more similar to us, defects included. This explains the “triumph” in the Turing test.

New challenges for human-machine interaction

If machines become better and better at appearing human, how will we know who we are really interacting with? This is one of the questions raised by the study, which warns of growing “paranoia” in online interactions, especially for sensitive or confidential matters.

A scenario not too far from reality, if you think about how many times we interact daily with digital assistants, chatbots and other AI systems without even realizing it. With the risk of being influenced or manipulated without knowing it.

Capabilities are only a small part of AI’s value: their ability to understand the values, preferences and boundaries of others is equally essential. It is these qualities that will allow AI to serve as a faithful and reliable concierge for our lives.

In short, if on the one hand the evolution of systems like GPT-4 opens up exciting scenarios of collaboration between human and artificial intelligence, on the other it requires us to rethink the boundaries and methods of these interactions. Otherwise we risk getting lost in a world where reality and fiction become indistinguishable, suspecting that there is a machine behind every chat.

The reliability of the Turing test called into question

Then there is another question raised by the study, may Alan’s good soul forgive me: that of the actual validity of the Turing test as a yardstick for artificial intelligence. According to the authors themselves, in fact, the test is too simplistic in its approach, giving more weight to “stylistic and socio-emotional” factors than to real intellectual abilities.

In other words, systems like GPT-4 may be very good at “seeming” intelligent, perfectly imitating the way humans express themselves and relate to one another, without actually being so. An ability that risks making us overestimate their real “IQ”.

It is no coincidence that the study speaks of “widespread social and economic consequences” linked to the advent of increasingly “human” AI. From the loss of jobs to the alteration of social dynamics, passing through risks of manipulation and disinformation. All questions that require deep reflection on the place we want to give these technologies in our lives.

In short, the “success” of GPT-4 at the Turing test is indeed a historic achievement on the path of artificial intelligence, but it also opens up a series of thorny questions about the relationship between man and machine. Whatever the answers to these questions, the impression is that we will soon be asking ourselves whether they were given by a real person or not. And this, perhaps, is the real news.